A generative model aims to learn a dataset’s true distribution and to create new data from it using unsupervised learning. These models (such as StyleGAN) have had mixed success, because the probability distributions underlying some datasets are too complex to model easily.

To sidestep these roadblocks, the adversarial nets framework was created, in which the generative model is pitted against an adversary: a discriminative model that learns to determine whether a sample comes from the model distribution or the data distribution.
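For reference, the two-player game described above is usually written as the minimax objective from the original adversarial nets paper. Here $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the data distribution, and $p_z$ the noise prior:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator tries to push $V$ up by scoring real samples near 1 and generated samples near 0, while the generator tries to push it down by producing samples the discriminator scores as real.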

The generative model generates samples by passing random noise through a multilayer perceptron, and the discriminative model is also a multilayer perceptron. We refer to this case as Adversarial Nets.
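A minimal sketch of the two multilayer perceptrons, assuming NumPy and showing only an untrained forward pass. The layer sizes, the weight initialization, and the helper names (`init_mlp`, `forward`) are illustrative assumptions and are not taken from the paper or its code.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes):
    """Create (weights, bias) pairs for a fully connected network."""
    return [(rng.normal(0.0, 0.1, size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, x, final):
    """Run x through the MLP: ReLU on hidden layers, `final` on the output."""
    for w, b in params[:-1]:
        x = np.maximum(x @ w + b, 0.0)
    w, b = params[-1]
    return final(x @ w + b)

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

NOISE_DIM, DATA_DIM = 16, 64  # illustrative sizes, not from the paper
generator = init_mlp([NOISE_DIM, 32, DATA_DIM])
discriminator = init_mlp([DATA_DIM, 32, 1])

z = rng.normal(size=(8, NOISE_DIM))             # random noise
fake = forward(generator, z, np.tanh)           # generated samples
p_real = forward(discriminator, fake, sigmoid)  # D's belief that each sample is real
print(fake.shape, p_real.shape)
```

In a real setup both networks would be trained against each other; this sketch only shows how noise flows through the generator and how the discriminator turns a sample into a probability.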

The paper that presents the adversarial nets framework can be found here, along with the code used for the framework.

Which Face is Real?

Which Face Is Real? was developed by Jevin West and Carl Bergstrom from the University of Washington as part of the Calling Bullshit Project.

“Computers are good, but your visual processing systems are even better. If you know what to look for, you can spot these fakes at a single glance — at least for the time being. The hardware and software used to generate them will continue to improve, and it may be only a few years until humans fall behind in the arms race between forgery and detection.” – Jevin West and Carl Bergstrom

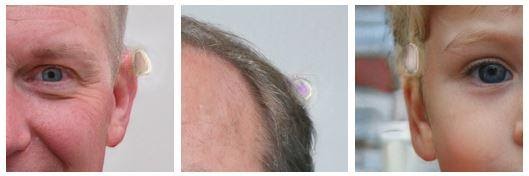

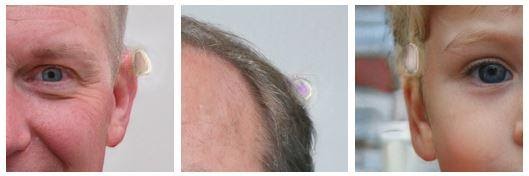

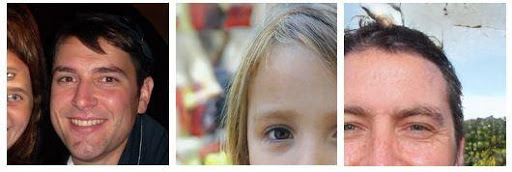

How Do You Tell the Difference Between the Images?

The differences show up in six main areas:

Water splotches

- The algorithm produces shiny blobs that look somewhat like water splotches on old photographic prints.

Hair

- Disconnected strands and hair that is too straight or too streaked are common problems when generating hair.

Asymmetry

- A common problem is asymmetry, which is often easiest to spot in eyeglasses. Often the frame will take one style at the left and another at the right, or there will be a wayfarer-style ornament on one side but not on the other. Other times the frame will just be crooked or jagged. In addition, there can be asymmetries in facial hair, different earrings in the left and right ears, and different forms of collar or fabric on the left and right sides.

Background problems

- The background of the image may show artifacts such as blurriness or misshapen objects. This is because the neural net is trained to generate the face, and less emphasis is given to the background of the image.

Fluorescent bleed

- Fluorescent colors can sometimes bleed into the hair or face from the background, and observers can mistake this for colored hair.

Teeth

- Teeth are also hard to render and can come out odd-shaped or asymmetric. For those who know what to look for, sometimes three incisors appear in the image.

Testing Out the StyleGAN Algorithm

All the code for StyleGAN has been open-sourced in the stylegan repository, which gives details on how you can run the StyleGAN algorithm yourself. Let’s start with the basic system requirements.

System Requirements

- Both Linux and Windows are supported, but we strongly recommend Linux for performance and compatibility reasons.

- 64-bit Python 3.6 installation. We recommend Anaconda3 with numpy 1.14.3 or newer.

- TensorFlow 1.10.0 or newer with GPU support.

- One or more high-end NVIDIA GPUs with at least 11GB of DRAM. We recommend NVIDIA DGX-1 with 8 Tesla V100 GPUs.

- NVIDIA driver 391.35 or newer, CUDA toolkit 9.0 or newer, cuDNN 7.3.1 or newer.

A minimal example of using a pre-trained StyleGAN generator is given in pretrained_example.py. It is executed as follows:

    > python pretrained_example.py
    Downloading https://drive.google.com/uc?id... .... done

    Gs                Params      OutputShape           WeightShape
    ---               ---         ---                   ---
    latents_in        -           (?, 512)              -
    ...
    images_out        -           (?, 3, 1024, 1024)    -
    ---               ---         ---                   ---
    Total             26219627

Once you execute ‘python pretrained_example.py’, type in ‘ls results’ to see the results.

    > ls results
    example.png # https://drive.google.com/uc?id...
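The shapes in the output above (a 512-dimensional latent in, a 3×1024×1024 image out) can be illustrated without loading the network. The following is a minimal sketch, assuming NumPy and assuming the generator output is a float array in the range [-1, 1] with channels first. The helper names `sample_latents` and `to_uint8_nhwc`, the seed, and the small dummy image are hypothetical stand-ins, not functions or values from the stylegan repository.

```python
import numpy as np

def sample_latents(batch_size, latent_dim=512, seed=5):
    """Draw standard-normal latent vectors, one row per image."""
    rnd = np.random.RandomState(seed)
    return rnd.randn(batch_size, latent_dim)

def to_uint8_nhwc(images_nchw):
    """Map float images in [-1, 1], laid out (N, C, H, W), to uint8 (N, H, W, C)."""
    scaled = np.clip(np.rint(images_nchw * 127.5 + 127.5), 0, 255)
    return scaled.astype(np.uint8).transpose(0, 2, 3, 1)

latents = sample_latents(2)
# Stand-in for the generator output; a small 8x8 image keeps the example light.
dummy_images = np.tanh(np.random.RandomState(0).randn(2, 3, 8, 8))
pixels = to_uint8_nhwc(dummy_images)
print(latents.shape, pixels.shape, pixels.dtype)
```

With the real network, the dummy array would be replaced by the generator output for `latents`, and the resulting `pixels` array could be saved as a PNG.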

Prepare the Datasets for Training

The training and evaluation scripts operate on datasets stored as multi-resolution TFRecords. Each dataset is represented by a directory containing the same image data in several resolutions to enable efficient streaming. There is a separate *.tfrecords file for each resolution, and if the dataset contains labels, they are stored in a separate file as well. By default, the scripts expect to find the datasets at datasets/<NAME>/<NAME>-<RESOLUTION>.tfrecords. The directory can be changed by editing config.py:

    result_dir = 'results'
    data_dir = 'datasets'
    cache_dir = 'cache'
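To check a dataset directory before training, the path pattern described above can be spelled out in a few lines. This is a minimal sketch: `tfrecord_path` and `missing_files` are hypothetical helpers, and the resolution tag `"1024"` is only a placeholder, since the exact suffix is whatever dataset_tool.py writes.

```python
from pathlib import Path

def tfrecord_path(data_dir, name, resolution_tag):
    """Build <data_dir>/<NAME>/<NAME>-<RESOLUTION>.tfrecords."""
    return Path(data_dir) / name / f"{name}-{resolution_tag}.tfrecords"

def missing_files(data_dir, name, resolution_tags):
    """Return the expected files that do not exist on disk."""
    paths = [tfrecord_path(data_dir, name, tag) for tag in resolution_tags]
    return [p for p in paths if not p.is_file()]

data_dir = "datasets"  # mirrors the data_dir entry in config.py
print(tfrecord_path(data_dir, "ffhq", "1024").as_posix())
```

Calling `missing_files(data_dir, "ffhq", tags)` with the tags you expect gives a quick list of files that still need to be generated or downloaded.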

To obtain the FFHQ dataset (datasets/ffhq), please refer to the Flickr-Faces-HQ repository.

To obtain the CelebA-HQ dataset (datasets/celebahq), please refer to the Progressive GAN repository.

To obtain other datasets, including LSUN, please consult their corresponding project pages. The datasets can be converted to multi-resolution TFRecords using the provided dataset_tool.py:

    > python dataset_tool.py create_lsun datasets/lsun-bedroom-full ~/lsun/bedroom_lmdb --resolution 256
    > python dataset_tool.py create_lsun_wide datasets/lsun-car-512x384 ~/lsun/car_lmdb --width 512 --height 384
    > python dataset_tool.py create_lsun datasets/lsun-cat-full ~/lsun/cat_lmdb --resolution 256
    > python dataset_tool.py create_cifar10 datasets/cifar10 ~/cifar10
    > python dataset_tool.py create_from_images datasets/custom-dataset ~/custom-images

Training the StyleGAN Networks

Once the datasets are set up, you can train your own StyleGAN networks as follows:

- Edit train.py to specify the dataset and training configuration by uncommenting or editing specific lines.

- Run the training script with python train.py.

- The results are written to a newly created directory results/<ID>-<DESCRIPTION>.

- The training may take several days (or weeks) to complete, depending on the configuration.

By default, train.py is configured to train the highest-quality StyleGAN (configuration F in Table 1 of the StyleGAN paper) for the FFHQ dataset at 1024×1024 resolution using 8 GPUs.

Expected StyleGAN Training Time

Below you will find NVIDIA’s reported expected training times for the default configuration of the train.py script (available in the stylegan repository) on Tesla V100 GPUs for the FFHQ dataset (available in the Flickr-Faces-HQ repository).

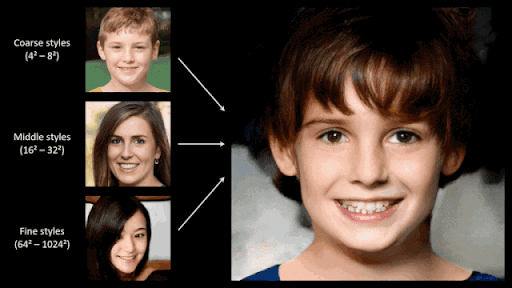

StyleGAN: Bringing it All Together

The algorithm behind this amazing app is called StyleGAN and was the brainchild of Tero Karras, Samuli Laine, and Timo Aila at NVIDIA. It is based on earlier work by Ian Goodfellow and colleagues on Generative Adversarial Networks (GANs).

Generative models have a limitation: it is hard to control specific characteristics of the generated photographs, such as facial features. NVIDIA’s StyleGAN addresses this limitation. The model allows the user to tune parameters that control the differences between photographs.

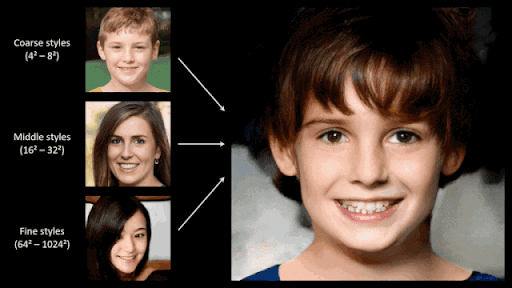

StyleGAN handles the variability of photos by applying styles at each convolution layer. These styles represent different features of a photo of a person, such as facial features, background color, hair, and wrinkles. The model generates two images, A and B, and then combines them by taking low-level features from A and the rest from B. At each level, different features (styles) are used to generate an image:

- Coarse styles (resolutions from 4×4 to 8×8) – pose, hair, face shape

- Middle styles (resolutions from 16×16 to 32×32) – facial features, eyes

- Fine styles (resolutions from 64×64 to 1024×1024) – color scheme
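The grouping above can be sketched as index ranges over per-layer style vectors. This is a minimal sketch, assuming NumPy and assuming two style inputs per resolution, which gives 18 layers for a 1024×1024 generator. `STYLE_GROUPS` and `mix_styles` are hypothetical names for illustration and are not part of the stylegan repository, and the random arrays stand in for real style vectors.

```python
import numpy as np

NUM_LAYERS, STYLE_DIM = 18, 512  # two style inputs per resolution, 4x4 to 1024x1024

# Layer index ranges for each group, assuming two layers per resolution.
STYLE_GROUPS = {
    "coarse": range(0, 4),   # 4x4 and 8x8
    "middle": range(4, 8),   # 16x16 and 32x32
    "fine": range(8, 18),    # 64x64 to 1024x1024
}

def mix_styles(styles_a, styles_b, groups_from_b):
    """Copy the named groups of per-layer styles from B into a copy of A."""
    mixed = styles_a.copy()
    for group in groups_from_b:
        idx = list(STYLE_GROUPS[group])
        mixed[idx] = styles_b[idx]
    return mixed

rng = np.random.default_rng(0)
styles_a = rng.normal(size=(NUM_LAYERS, STYLE_DIM))
styles_b = rng.normal(size=(NUM_LAYERS, STYLE_DIM))
mixed = mix_styles(styles_a, styles_b, ["coarse"])
print(np.array_equal(mixed[:4], styles_b[:4]), np.array_equal(mixed[4:], styles_a[4:]))
```

Feeding `mixed` to the synthesis network would, under these assumptions, produce an image whose coarse attributes follow B while the middle and fine attributes follow A.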

Have you tested out StyleGAN before, or is this your first time? Let us know in the comment section below. We are always looking for new and creative ways the community is using these technologies and frameworks.

Other Helpful Resources for GANs and StyleGAN

- See here for the official paper on StyleGAN and the TensorFlow implementation.

- See here for the official paper on StyleGAN2, Analyzing and Improving the Image Quality of StyleGAN, and the TensorFlow implementation.

- Read our blog on The Benefits of Adversarial AI.
