Showdown of the Data Center GPUs: A100 vs V100S

For this blog article, we conducted deep learning performance benchmarks for TensorFlow on NVIDIA A100 GPUs. We also compared these GPUs with their top-of-the-line predecessor, the Volta-powered NVIDIA V100S.

Our Deep Learning Server was fitted with 8 NVIDIA A100 PCIe GPUs. We ran the standard “tf_cnn_benchmarks.py” benchmark script from the official TensorFlow GitHub repository and tested four neural networks: ResNet50, ResNet152, Inception v3, and Inception v4. We ran each test in 1, 2, 4, and 8 GPU configurations, using the largest batch size that fit into available GPU memory.
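The test matrix described above (four models, four GPU counts, two precisions) can be sketched as a small Python driver that builds one tf_cnn_benchmarks.py command per run. This is a hypothetical reconstruction, not the exact invocation we used: it assumes the script's `--model`, `--num_gpus`, `--batch_size`, and `--use_fp16` flags and its model names `resnet50`, `resnet152`, `inception3`, and `inception4`, and it takes the batch sizes from the tables below. tf_cnn_benchmarks treats `--batch_size` as a per-device value; treating the tables' batch sizes as per-GPU values is likewise an assumption.

```python
import itertools
import shlex

# Model names as accepted by tf_cnn_benchmarks' --model flag.
MODELS = ["resnet50", "resnet152", "inception3", "inception4"]
GPU_COUNTS = [1, 2, 4, 8]

# Batch sizes copied from the article's tables; assumed (not confirmed) to be per GPU.
BATCH_SIZES = {
    "fp16": {"resnet50": 512, "resnet152": 256, "inception3": 512, "inception4": 256},
    "fp32": {"resnet50": 256, "resnet152": 128, "inception3": 256, "inception4": 128},
}


def build_commands(script="tf_cnn_benchmarks.py"):
    """Return one argv list per (precision, model, GPU count) combination."""
    commands = []
    for precision, model, num_gpus in itertools.product(BATCH_SIZES, MODELS, GPU_COUNTS):
        argv = [
            "python", script,
            f"--model={model}",
            f"--num_gpus={num_gpus}",
            f"--batch_size={BATCH_SIZES[precision][model]}",
        ]
        if precision == "fp16":
            argv.append("--use_fp16=True")  # FP32 runs simply omit this flag
        commands.append(argv)
    return commands


if __name__ == "__main__":
    for argv in build_commands():
        # Print rather than execute; pass argv to subprocess.run() to launch a run.
        print(shlex.join(argv))
```

Printing the commands first makes it easy to review the full 32-run matrix before committing GPU time.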

Key Points and Observations

- The NVIDIA A100 is an exceptional GPU for deep learning with performance unseen in previous generations.

- The NVIDIA A100 scales well up to 8 GPUs, the largest configuration we tested: going from 1 to 8 GPUs raised throughput by roughly 5.3x to 6.1x in FP16 and 5.8x to 6.9x in FP32.

- In our 4-GPU comparison, the A100 delivered at least 2x the throughput of the V100S in every test, in both FP16 and FP32.


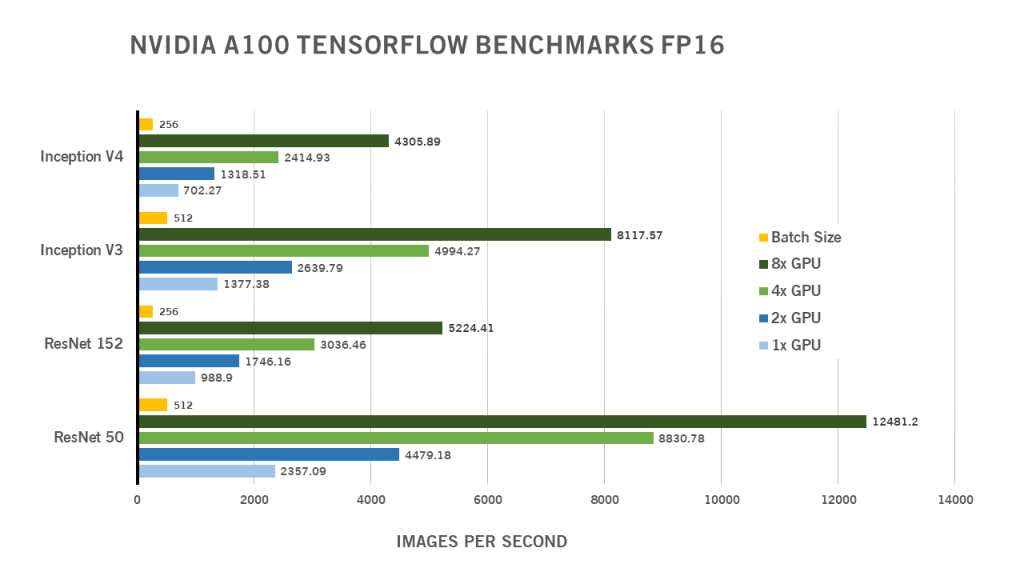

NVIDIA A100 Deep Learning Benchmarks FP16

Throughput in images/sec (higher is better).

| Model | 1x GPU | 2x GPU | 4x GPU | 8x GPU | Batch Size |
|---|---|---|---|---|---|
| ResNet 50 | 2357.09 | 4479.18 | 8830.78 | 12481.2 | 512 |
| ResNet 152 | 988.9 | 1746.16 | 3036.46 | 5224.41 | 256 |
| Inception V3 | 1377.38 | 2639.79 | 4994.27 | 8117.57 | 512 |
| Inception V4 | 702.27 | 1318.51 | 2414.93 | 4305.89 | 256 |

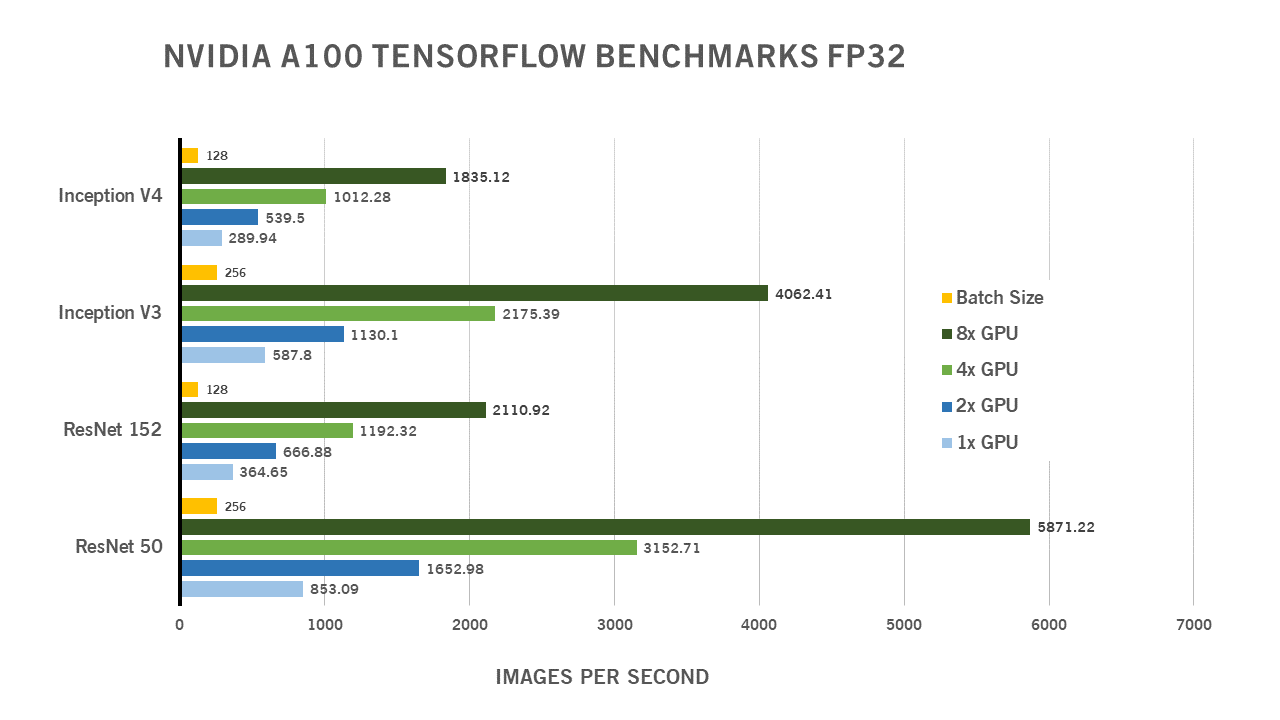

NVIDIA A100 Deep Learning Benchmarks FP32

Throughput in images/sec (higher is better).

| Model | 1x GPU | 2x GPU | 4x GPU | 8x GPU | Batch Size |
|---|---|---|---|---|---|
| ResNet 50 | 853.09 | 1652.98 | 3152.71 | 5871.22 | 256 |
| ResNet 152 | 364.65 | 666.88 | 1192.32 | 2110.92 | 128 |
| Inception V3 | 587.8 | 1130.1 | 2175.39 | 4062.41 | 256 |
| Inception V4 | 289.94 | 539.5 | 1012.28 | 1835.12 | 128 |
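The scaling results in the two tables above can be checked with a few lines of arithmetic. The sketch below computes, for each model, the speedup of N GPUs over a single GPU and the scaling efficiency (speedup divided by N, where 1.0 would be perfectly linear). The throughput values are copied from the tables; the function and variable names are illustrative.

```python
# Throughput (images/sec) from the article's tables, for 1, 2, 4, and 8 GPUs.
GPU_COUNTS = (1, 2, 4, 8)

FP16 = {
    "ResNet 50": (2357.09, 4479.18, 8830.78, 12481.2),
    "ResNet 152": (988.9, 1746.16, 3036.46, 5224.41),
    "Inception V3": (1377.38, 2639.79, 4994.27, 8117.57),
    "Inception V4": (702.27, 1318.51, 2414.93, 4305.89),
}

FP32 = {
    "ResNet 50": (853.09, 1652.98, 3152.71, 5871.22),
    "ResNet 152": (364.65, 666.88, 1192.32, 2110.92),
    "Inception V3": (587.8, 1130.1, 2175.39, 4062.41),
    "Inception V4": (289.94, 539.5, 1012.28, 1835.12),
}


def scaling(throughputs):
    """Return {gpu_count: (speedup, efficiency)} relative to the 1-GPU result."""
    base = throughputs[0]
    result = {}
    for n, t in zip(GPU_COUNTS, throughputs):
        speedup = t / base
        result[n] = (speedup, speedup / n)  # efficiency of 1.0 means perfectly linear
    return result


for label, table in (("FP16", FP16), ("FP32", FP32)):
    for model, values in table.items():
        speedup, eff = scaling(values)[8]
        print(f"{label} {model:<13} 8 GPUs: {speedup:.2f}x speedup, {eff:.0%} efficiency")
```

On these numbers, 8-GPU scaling efficiency works out to roughly 66% to 77% in FP16 and 72% to 86% in FP32, so the FP32 runs scale closer to linearly.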

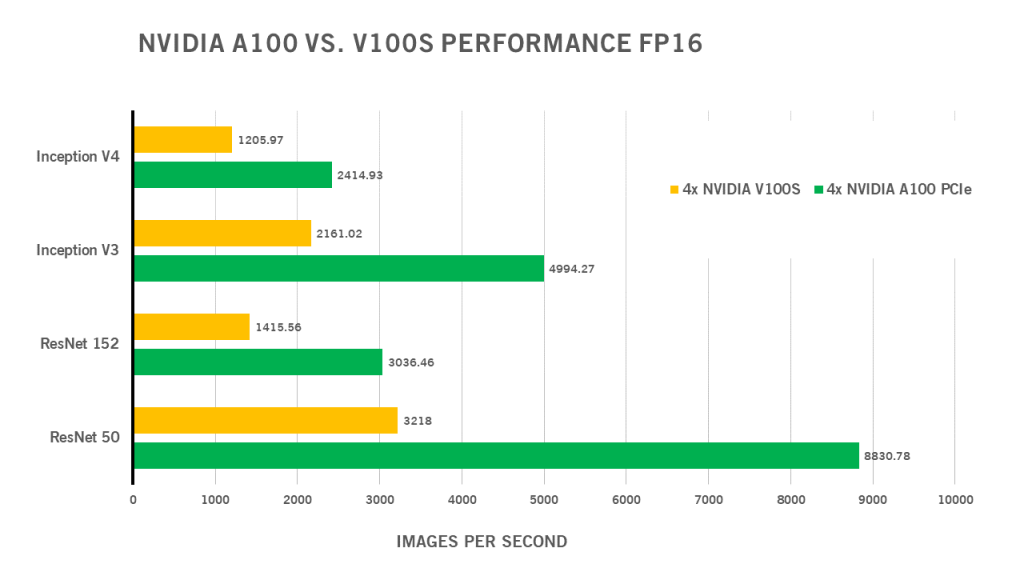

NVIDIA A100 PCIe vs NVIDIA V100S PCIe FP16 Comparison

The NVIDIA A100 clearly outperforms the Volta-based V100S, with FP16 throughput gains ranging from about 2.0x (Inception V4) to 2.7x (ResNet 50) in our 4-GPU comparison. These tests cover only image classification workloads; however, the results are in line with previous tests by NVIDIA showing similar performance gains.

| Model | 4x NVIDIA A100 PCIe (images/sec) | 4x NVIDIA V100S PCIe (images/sec) |
|---|---|---|
| ResNet 50 | 8830.78 | 3218 |
| ResNet 152 | 3036.46 | 1415.56 |
| Inception V3 | 4994.27 | 2161.02 |
| Inception V4 | 2414.93 | 1205.97 |
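The size of the A100's FP16 lead can be made precise by dividing the A100 result by the V100S result for each model. A minimal sketch using the 4-GPU FP16 numbers from the table above (the names are illustrative):

```python
# 4-GPU FP16 throughput (images/sec) from the table: (A100 PCIe, V100S PCIe).
FP16_4GPU = {
    "ResNet 50": (8830.78, 3218),
    "ResNet 152": (3036.46, 1415.56),
    "Inception V3": (4994.27, 2161.02),
    "Inception V4": (2414.93, 1205.97),
}


def speedups(pairs):
    """Return {model: A100 throughput / V100S throughput}."""
    return {model: a100 / v100s for model, (a100, v100s) in pairs.items()}


for model, ratio in speedups(FP16_4GPU).items():
    print(f"{model:<13} {ratio:.2f}x")
```

Every ratio comes out at or above 2x, so the FP16 advantage holds for each of the four networks individually, not only on average.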

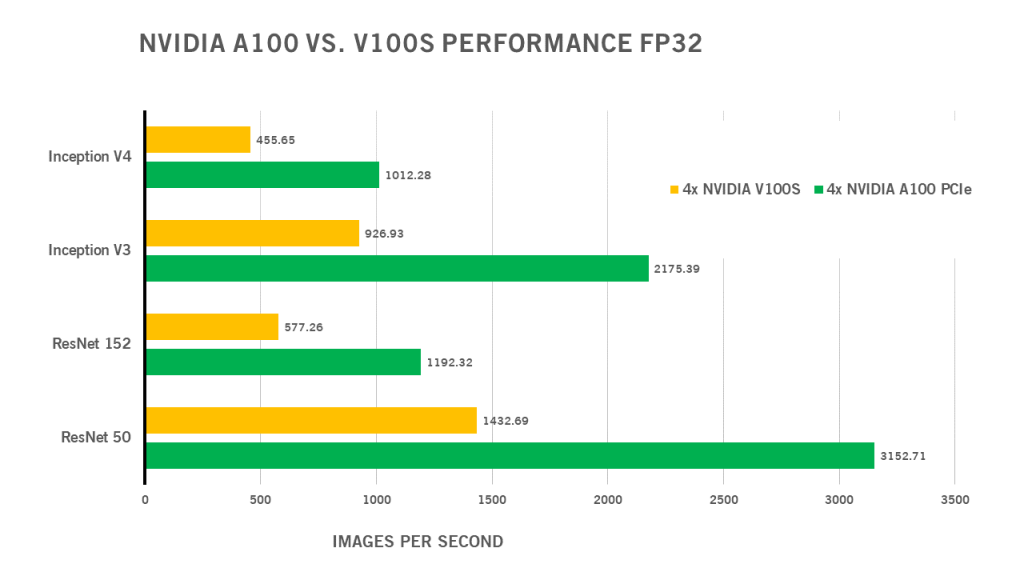

NVIDIA A100 PCIe vs NVIDIA V100S PCIe FP32 Comparison

As with the FP16 tests, the A100 handily outperforms the V100S in FP32, by a factor of roughly 2.1x to 2.3x depending on the model.

| Model | 4x NVIDIA A100 PCIe (images/sec) | 4x NVIDIA V100S PCIe (images/sec) |
|---|---|---|
| ResNet 50 | 3152.71 | 1432.69 |
| ResNet 152 | 1192.32 | 577.26 |
| Inception V3 | 2175.39 | 926.93 |
| Inception V4 | 1012.28 | 455.65 |
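To summarize the FP32 comparison in a single number, a geometric mean of the per-model speedups is a common way to average ratios, because it treats a 2x gain and a 0.5x loss symmetrically. A sketch using the 4-GPU FP32 numbers from the table above (the function name is illustrative):

```python
import math

# 4-GPU FP32 throughput (images/sec) from the table: (A100 PCIe, V100S PCIe).
FP32_4GPU = {
    "ResNet 50": (3152.71, 1432.69),
    "ResNet 152": (1192.32, 577.26),
    "Inception V3": (2175.39, 926.93),
    "Inception V4": (1012.28, 455.65),
}


def geometric_mean_speedup(pairs):
    """Geometric mean of A100/V100S throughput ratios across models."""
    logs = [math.log(a100 / v100s) for a100, v100s in pairs.values()]
    return math.exp(sum(logs) / len(logs))


ratios = {model: a / v for model, (a, v) in FP32_4GPU.items()}
print({model: round(r, 2) for model, r in ratios.items()})
print(f"geometric mean: {geometric_mean_speedup(FP32_4GPU):.2f}x")
```

For these four models the geometric mean comes out at roughly 2.2x.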

Benchmark System Specs

| Component | Details |
|---|---|
| System | Exxact AI Server |
| CPU | 2x AMD EPYC 7552 |
| GPU | 8x NVIDIA A100 PCIe |
| System Memory | 512 GB |
| Storage | 2x 480 GB + 3.84 TB |
| TensorFlow Version | NVIDIA Release 20.10-tf2 (build 16775790), TensorFlow 2.3.1 |

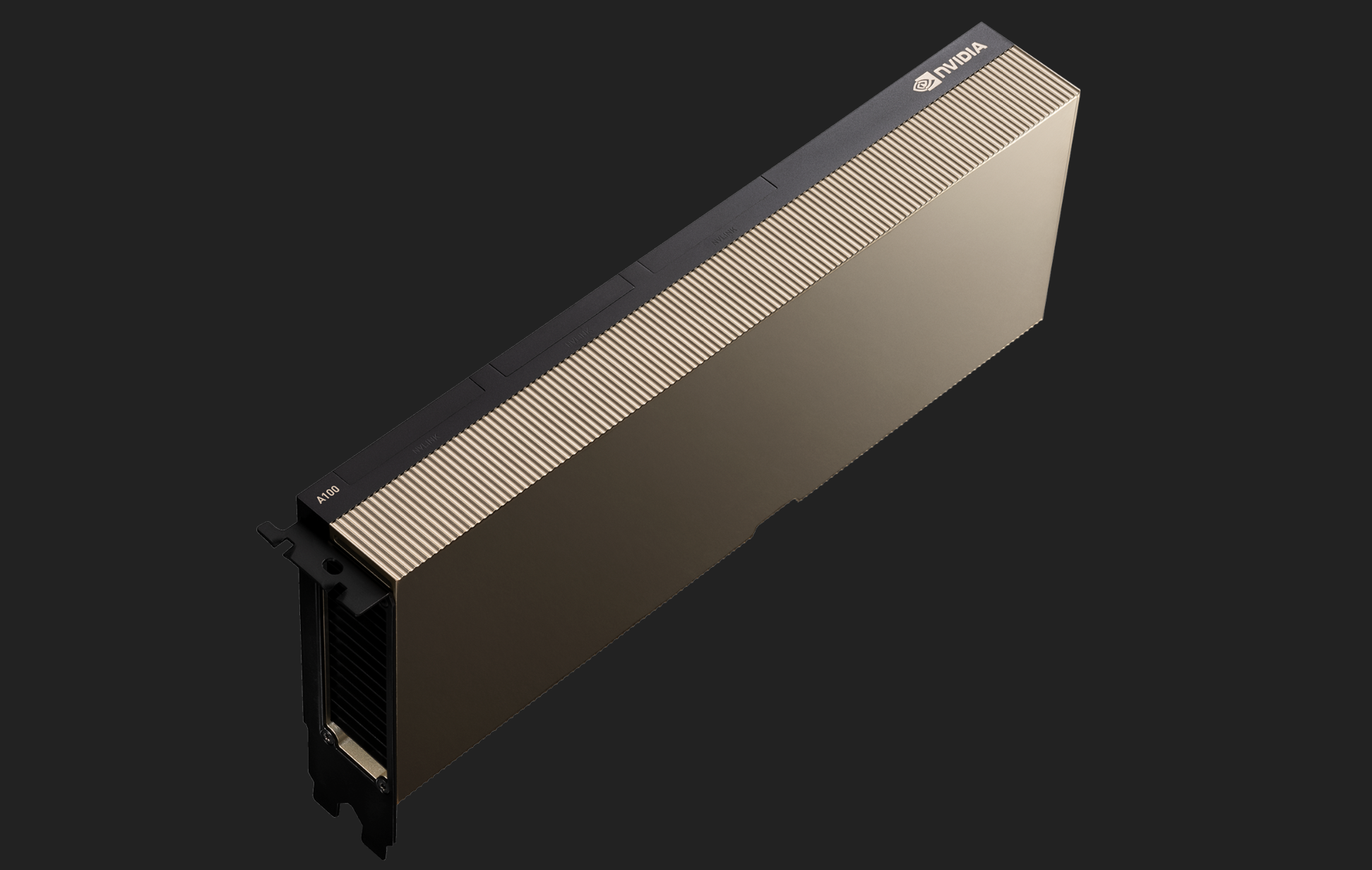

More Info and Specs About NVIDIA A100 PCIe GPU

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration and flexibility to power the world’s highest-performing elastic data centers for AI, data analytics, and HPC applications. As the engine of the NVIDIA data center platform, the A100 provides massive performance upgrades over V100 GPUs and can efficiently scale up to thousands of GPUs or be partitioned into seven isolated GPU instances to accelerate workloads of all sizes.

| Specification | NVIDIA A100 PCIe |
|---|---|
| Peak FP64 | 9.7 TF |
| Peak FP64 Tensor Core | 19.5 TF |
| Peak FP32 | 19.5 TF |
| Peak TF32 Tensor Core | 156 TF (312 TF with sparsity) |
| Peak BFLOAT16 Tensor Core | 312 TF (624 TF with sparsity) |
| Peak FP16 Tensor Core | 312 TF (624 TF with sparsity) |
| Peak INT8 Tensor Core | 624 TOPS (1,248 TOPS with sparsity) |
| Peak INT4 Tensor Core | 1,248 TOPS (2,496 TOPS with sparsity) |
| GPU Memory | 40 GB |
| GPU Memory Bandwidth | 1,555 GB/s |
| Interconnect | NVIDIA NVLink: 600 GB/s; PCIe Gen4: 64 GB/s |
| Multi-Instance GPU (MIG) | Various instance sizes, up to 7 instances at 5 GB each |
| Form Factor | PCIe |
| Max TDP Power | 250 W |

Have any questions about NVIDIA GPUs or AI Servers?

Contact Exxact Today
