Cryo-EM & the Emergence of Massive Datasets

With advances in automation, compute power, and visual technology, the scope and complexity of Cryo-EM datasets have grown substantially. To keep pace, several compute-intensive steps in single-particle Cryo-EM now take advantage of GPUs. In this blog, we review some of the commonly used options for running RELION with MPI and GPU acceleration.

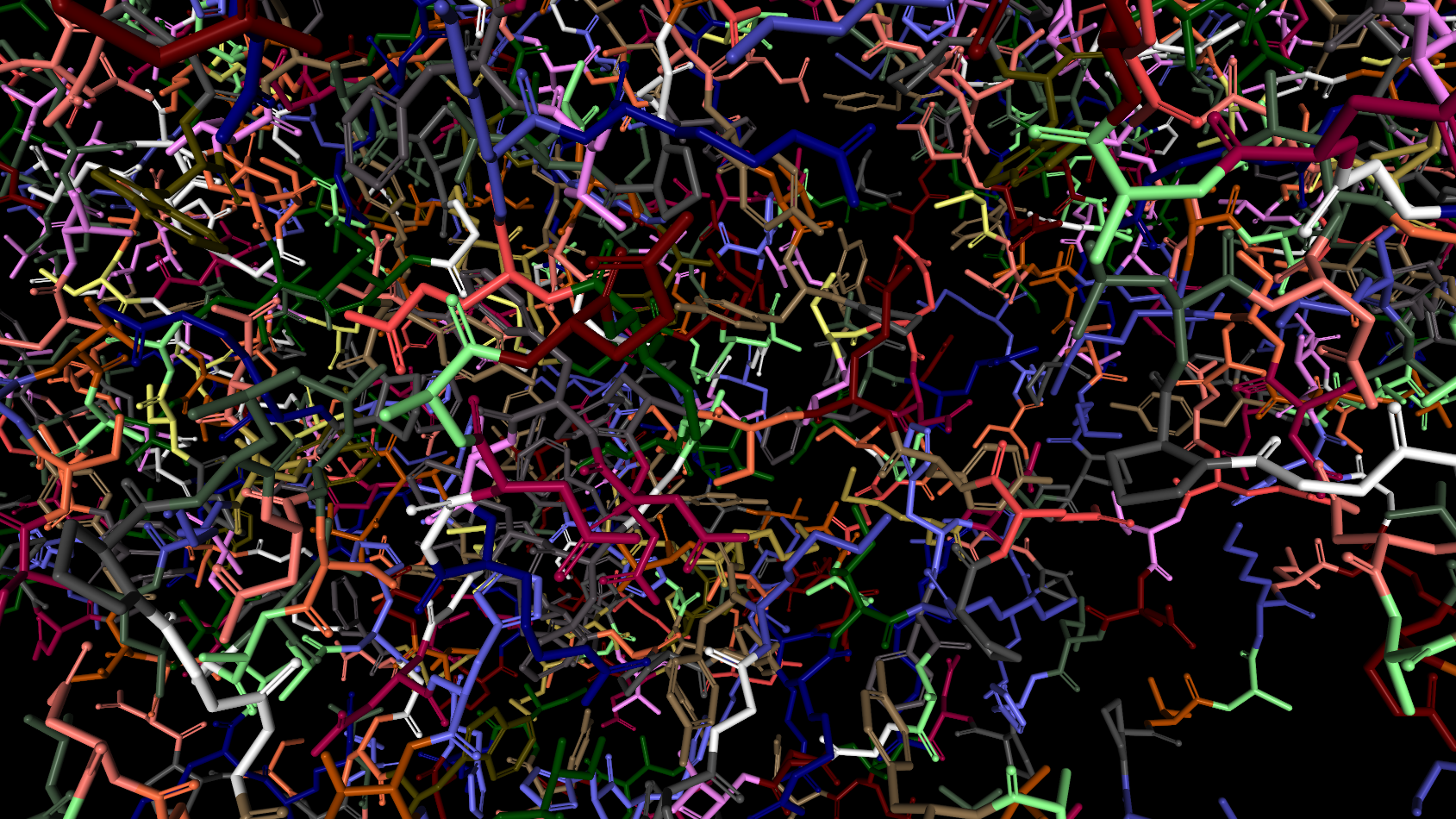

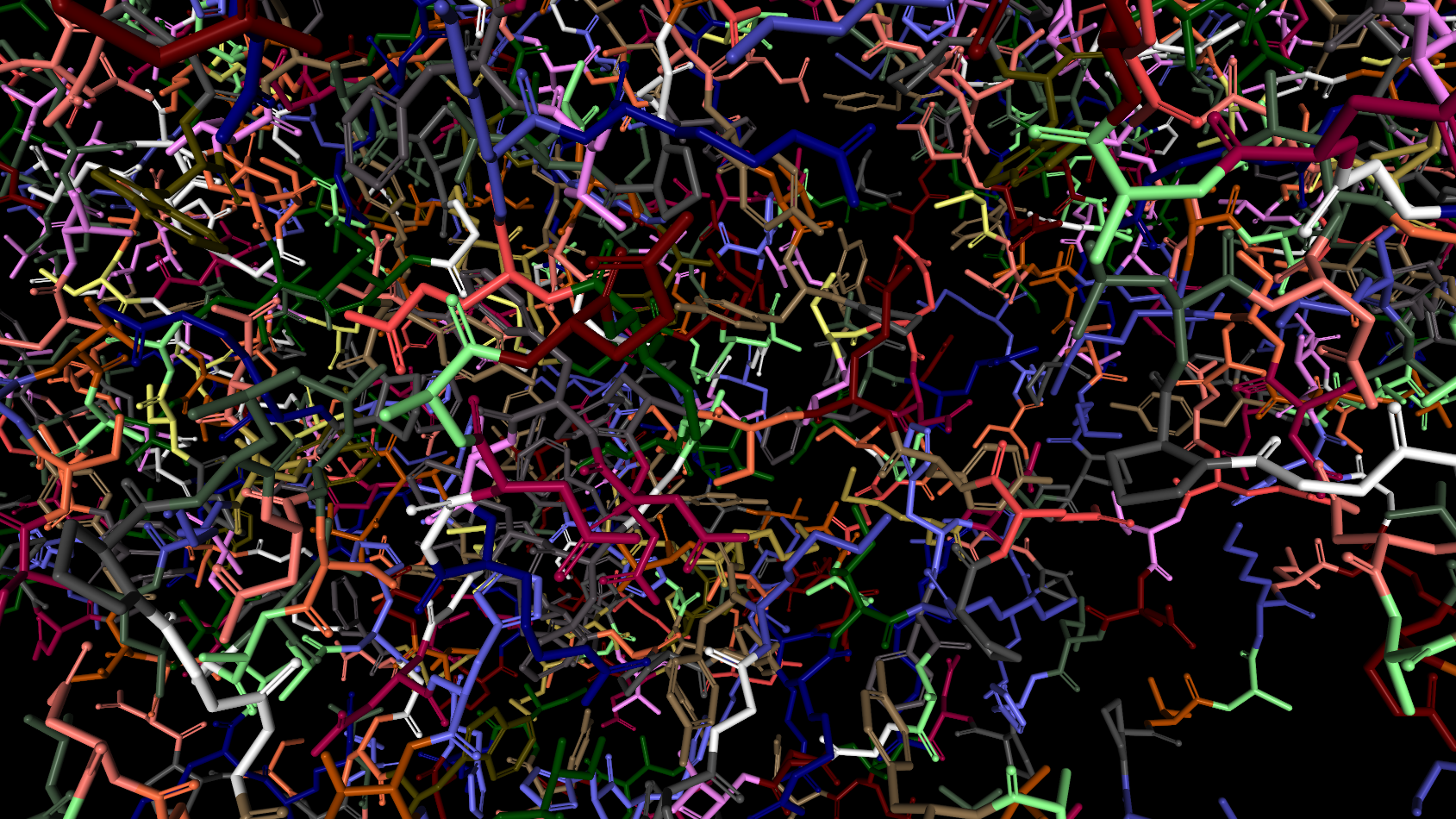

The requirements for processing this data continue to increase. A typical experiment often takes ~2,000-10,000 files, captured from 1-10 terabytes (TB) of raw data, to generate high-resolution single-particle maps, as seen in the chart below.

For RELION-3, the developers created a general code path in which the CPU algorithms were rewritten to mirror the GPU-accelerated code. This provides two execution branches that run efficiently both on GPUs and on the single-instruction, multiple-data (SIMD) vector units present on traditional CPUs.

HPC Advanced Acceleration Options using MPI

RELION offers flexibility in its deployment. If you're familiar with MPI, you can choose the number of parallel MPI processes to run and combine it with the following flags:

| Flag | Description |
| --- | --- |
| `--j` | The number of parallel threads to run per MPI process. |
| `--dont_combine_weights_via_disc` | Messages are passed through the network instead of reading/writing large files on disk. |
| `--gpu` | Use GPU acceleration. |
| `--cpu` | Use CPU acceleration (commonly used on large CPU clusters). |
| `--pool` | Determines how many particles are processed together in a function call. Use ~10-50 for GPU jobs and 1-2x the number of threads for CPU jobs. |
| `--no_parallel_disc_io` | By default, all MPI slaves read their own particles. Use this flag to have the master read all particles and send them through the network. |
| `--preread_images` | By default, all particles are read from disk every iteration. With this flag, they are all read into RAM once, at the beginning of the job. |
| `--scratch_dir` | By default, particles are read every iteration from the location specified in the input STAR file. With this option, all particles are copied to a scratch disk and read from there (every iteration) instead. Useful if you don't have enough RAM to pre-read all particles but have a large amount of SSD scratch space. |
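
As a rough illustration of how these flags combine, the sketch below assembles an `mpirun` argument list in Python. The helper name `build_refine_command` and the scratch path `/scratch/relion` are hypothetical, the executable is assumed to be on `PATH`, and job-specific arguments (input STAR file, output location, and so on) are deliberately left out, so treat this as a starting point rather than a complete command.

```python
import shlex


def build_refine_command(n_ranks, threads, pool, scratch_dir=None, use_gpu=True):
    """Assemble an mpirun argument list using only flags from the table above.

    Hypothetical helper: job-specific RELION arguments are intentionally omitted.
    """
    cmd = [
        "mpirun", "-n", str(n_ranks),
        "relion_refine_mpi",               # assumed to be on PATH
        "--j", str(threads),               # threads per MPI process
        "--pool", str(pool),               # particles processed per function call
        "--dont_combine_weights_via_disc", # pass messages over the network
    ]
    # Pick exactly one acceleration path.
    cmd.append("--gpu" if use_gpu else "--cpu")
    if scratch_dir is not None:
        cmd += ["--scratch_dir", scratch_dir]
    return cmd


cmd = build_refine_command(n_ranks=5, threads=4, pool=30,
                           scratch_dir="/scratch/relion")
# Print a shell-safe, copy-pasteable version of the argument list.
print(shlex.join(cmd))
```

Keeping the command as a list until the last moment avoids quoting mistakes if a path contains spaces.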

Advanced GPU Options for RELION Cryo-EM

Using the --gpu flag without arguments, RELION distributes processes over all available GPUs. This is the most common option if you're running a single job and not sharing the system with others. The following commands assume you have a 4-GPU RELION workstation.

Run a single MPI process on each GPU:

    mpirun -n 4 `which relion_autopick_mpi` --gpu

For classification and refinement jobs, the master does not share the calculations performed on the GPU, so run one extra MPI process:

    mpirun -n 5 `which relion_refine_mpi` --gpu

Run multiple threads per MPI process using the --j flag:

    mpirun -n 5 `which relion_refine_mpi` --j 4 --gpu

This may speed up calculations without costing extra system memory. In effect, this produces 4 working MPI ranks, each with 4 threads.
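
The rank arithmetic in these three commands can be sketched as follows. The function names and the `idle_master` parameter are illustrative only; they simply encode the statement above that the master rank of a refinement job does not take part in the GPU calculations.

```python
def ranks_for_gpus(n_gpus, idle_master):
    """Value to pass to `mpirun -n` for one working rank per GPU."""
    # A master that does no GPU work needs its own extra rank.
    return n_gpus + 1 if idle_master else n_gpus


def working_threads(n_ranks, threads_per_rank, idle_master):
    """Return (working ranks, total worker threads) for a given launch."""
    workers = n_ranks - 1 if idle_master else n_ranks
    return workers, workers * threads_per_rank


print(ranks_for_gpus(4, idle_master=False))      # autopick on 4 GPUs: 4
print(ranks_for_gpus(4, idle_master=True))       # refine on 4 GPUs: 5
print(working_threads(5, 4, idle_master=True))   # (4, 16)
```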

How to Specify GPUs for Multi-Job & Multi-User Environments

This section shows advanced commands for accessing certain GPUs on multi-GPU setups.

- Pass an argument to the --gpu option to provide a list of device indices.

- Delimit ranks with colons (:) and threads with commas (,).

The following rules apply:

- If a GPU ID is specified more than once for a single MPI rank, that GPU is assigned proportionally more of that rank's threads.

- If no colons are used, the GPUs specified apply to all ranks.

- If GPUs are specified for more than one rank (but not all), the unrestricted ranks are assigned the same GPUs as the restricted ranks.
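
To make the syntax concrete, here is a small Python model of how such a device string could be read. It is a sketch of the rules as described in this post, not RELION's actual parser: `parse_gpu_spec`, `expand_to_ranks`, and `gpu_share` are hypothetical names, and the third rule (partial rank lists) is deliberately not modelled.

```python
from collections import Counter


def parse_gpu_spec(spec):
    """Split a --gpu argument into one list of device ids per MPI rank.

    Colons separate ranks; commas separate the ids listed for one rank.
    """
    return [[int(dev) for dev in rank.split(",")] for rank in spec.split(":")]


def expand_to_ranks(spec, n_workers):
    """Apply the 'no colons means all ranks' rule to n_workers working ranks."""
    per_rank = parse_gpu_spec(spec)
    if len(per_rank) == 1:
        # No colons: every working rank gets the same device list.
        return [list(per_rank[0]) for _ in range(n_workers)]
    if len(per_rank) != n_workers:
        raise ValueError("partial rank lists are not modelled in this sketch")
    return per_rank


def gpu_share(devices):
    """Fraction of a rank's threads each GPU receives (repeated ids weigh more)."""
    counts = Counter(devices)
    return {dev: n / len(devices) for dev, n in counts.items()}


print(expand_to_ranks("2,3", 2))   # [[2, 3], [2, 3]]
print(expand_to_ranks("2:3", 2))   # [[2], [3]]
print(gpu_share([0, 1, 1, 2]))     # {0: 0.25, 1: 0.5, 2: 0.25}
```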

To use only two of the four GPUs for all MPI ranks, for example to leave the other two free for a separate user or job, you can specify:

    mpirun -n 3 `which relion_refine_mpi` --gpu 2:3

Slave 1 is told to use GPU2. Slave 2 is told to use GPU3.

To spread evenly over all GPUs, don't specify selection indices, as RELION will handle this itself. On a 4-GPU machine, run:

    mpirun -n 3 `which relion_refine_mpi` --gpu

Slave 1 will use GPU0 and GPU1 for its threads. Slave 2 will use GPU2 and GPU3 for its threads.
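
The even spread in this example can be pictured as giving each working rank a contiguous, near-equal slice of the device list. The sketch below reproduces the 4-GPU, 2-slave case; `spread_gpus` is a hypothetical helper, and the slicing scheme is an assumption chosen to match the example, not a description of RELION's internal scheduling.

```python
def spread_gpus(n_gpus, n_workers):
    """Give each working rank a contiguous, near-equal slice of GPU ids.

    Only the case with at most one working rank per GPU is modelled.
    """
    if not 0 < n_workers <= n_gpus:
        raise ValueError("this sketch needs 1 <= n_workers <= n_gpus")
    return [
        list(range(w * n_gpus // n_workers, (w + 1) * n_gpus // n_workers))
        for w in range(n_workers)
    ]


# `mpirun -n 3` on a refinement job leaves 2 working ranks on 4 GPUs.
print(spread_gpus(4, 2))   # [[0, 1], [2, 3]]
```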

You can also schedule individual threads from MPI processes on specific GPUs, which is useful when available RAM is a limitation. This example runs 3 MPI processes:

    mpirun -n 3 `which relion_refine_mpi` --j 4 --gpu 0,1,1,2:3

Slave 1 is told to put thread 1 on GPU0, threads 2 and 3 on GPU1, and thread 4 on GPU2. Slave 2 is told to put all 4 threads on GPU3.

Below is a more complex example that uses the full functionality of the GPU specification options:

    mpirun -n 4 ... --j 3 --gpu 2:2:1,3

Slave 1 runs 3 threads on GPU2, slave 2 runs 3 threads on GPU2, and slave 3 distributes its 3 threads as evenly as possible across GPU1 and GPU3.
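
The thread placements in the last two examples can be reproduced with a short Python model. `place_threads` is a hypothetical function, and the proportional indexing it uses is an assumption that happens to match the outcomes described above; the exact order in which RELION assigns threads to devices may differ.

```python
def place_threads(spec, threads):
    """Map each thread of each working rank to a GPU id.

    spec    -- a --gpu argument such as "0,1,1,2:3" (one entry per working rank)
    threads -- the value passed to --j
    """
    placements = []
    for rank_spec in spec.split(":"):
        devices = [int(dev) for dev in rank_spec.split(",")]
        # Walk the device list proportionally so threads spread as evenly
        # as possible and repeated ids receive more threads.
        placements.append(
            [devices[i * len(devices) // threads] for i in range(threads)]
        )
    return placements


print(place_threads("0,1,1,2:3", 4))   # [[0, 1, 1, 2], [3, 3, 3, 3]]
print(place_threads("2:2:1,3", 3))     # [[2, 2, 2], [2, 2, 2], [1, 1, 3]]
```

In the second call, the third slave ends up with two threads on one GPU and one on the other, which is the closest to an even split that 3 threads over 2 devices allows.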
