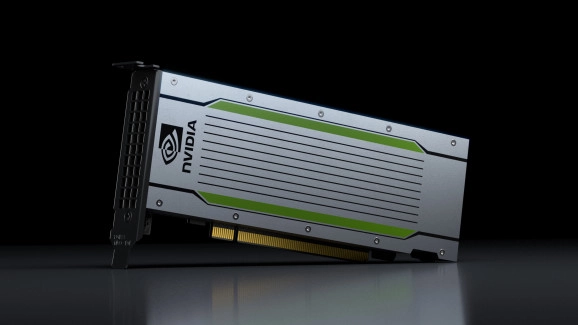

Introducing the New NVIDIA Tesla T4 GPU

At GTC Japan, NVIDIA announced its latest Turing-based GPU for machine learning and inferencing in the data center. The new Tesla T4 GPU, which uses the same Turing microarchitecture as the latest GeForce RTX 20-series gaming graphics cards, is NVIDIA's fastest data center inferencing platform yet.

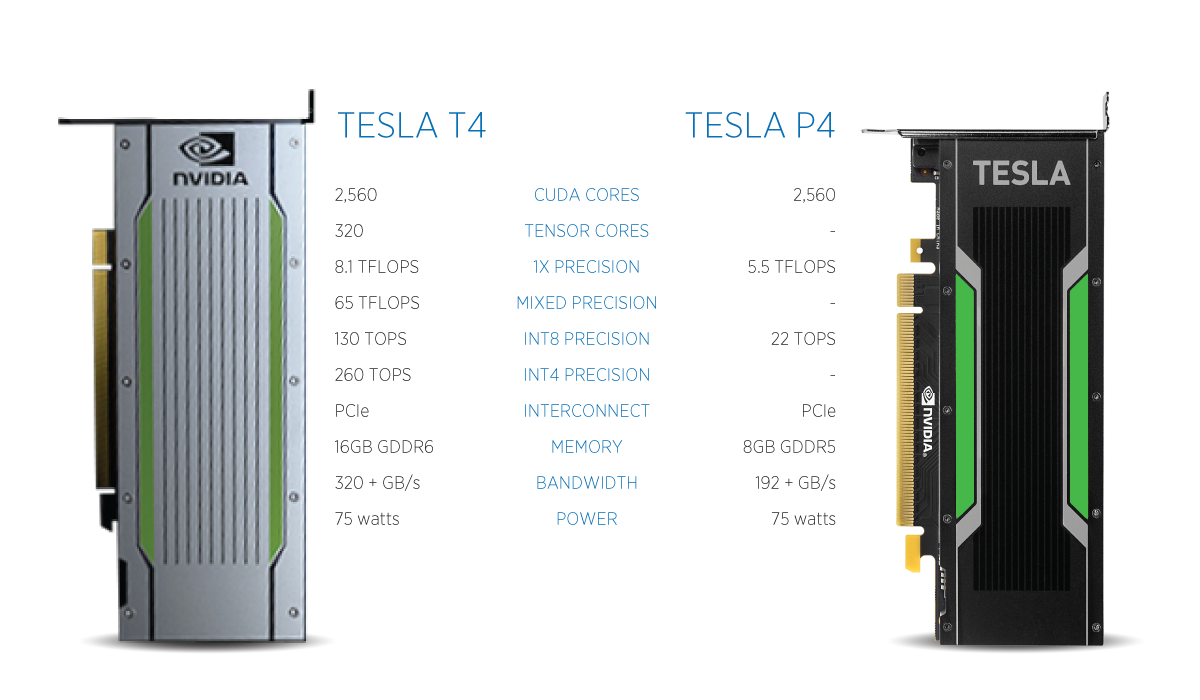

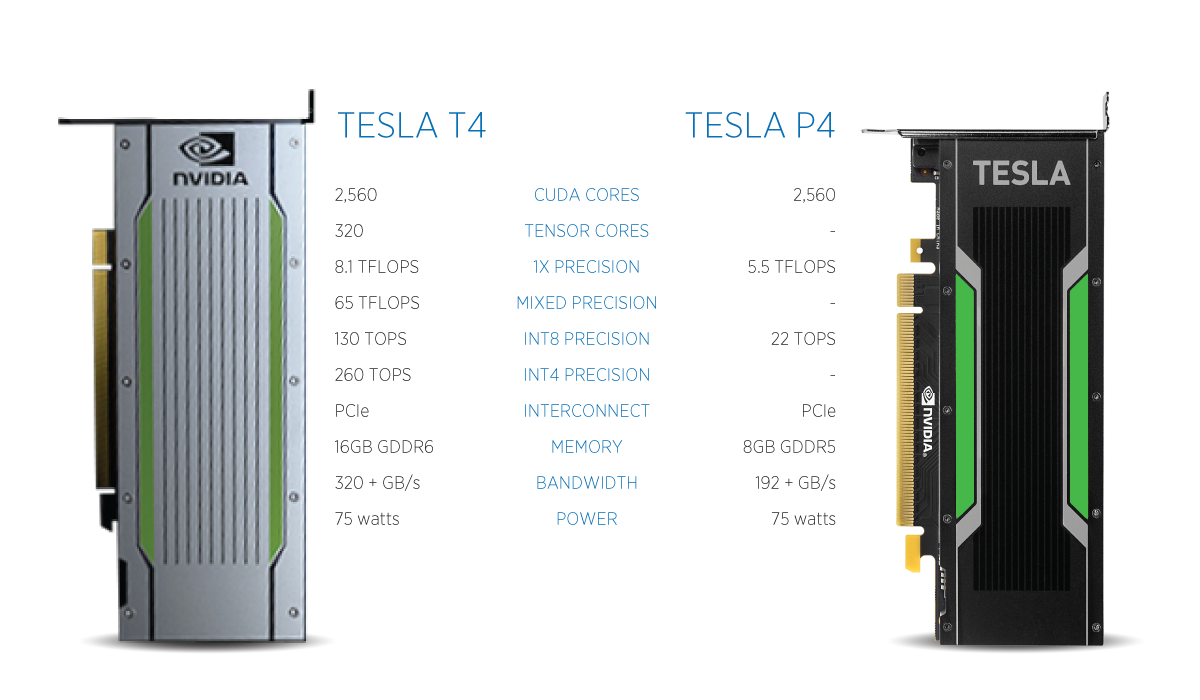

As NVIDIA states, "The NVIDIA Tesla T4 GPU is the world's most advanced inference accelerator." NVIDIA claims the new Tesla T4 is up to 12x faster than the previous-generation Tesla P4, potentially setting a new bar for power efficiency in inference workloads. The Tesla T4 comes in an energy-efficient 75-watt, low-profile PCIe form factor optimized for scale-out servers.

Powering the TensorRT Hyperscale Inference Platform

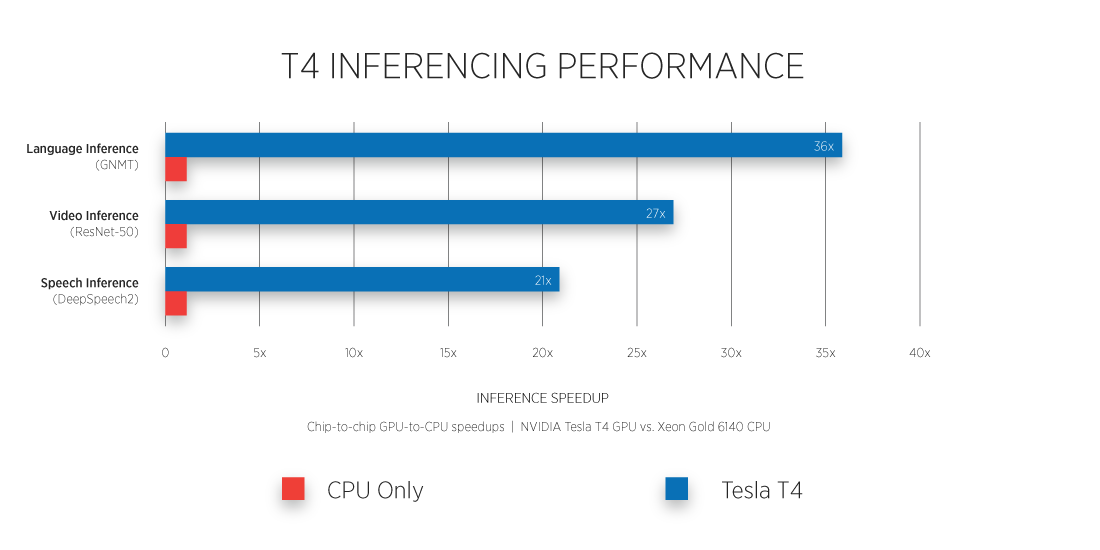

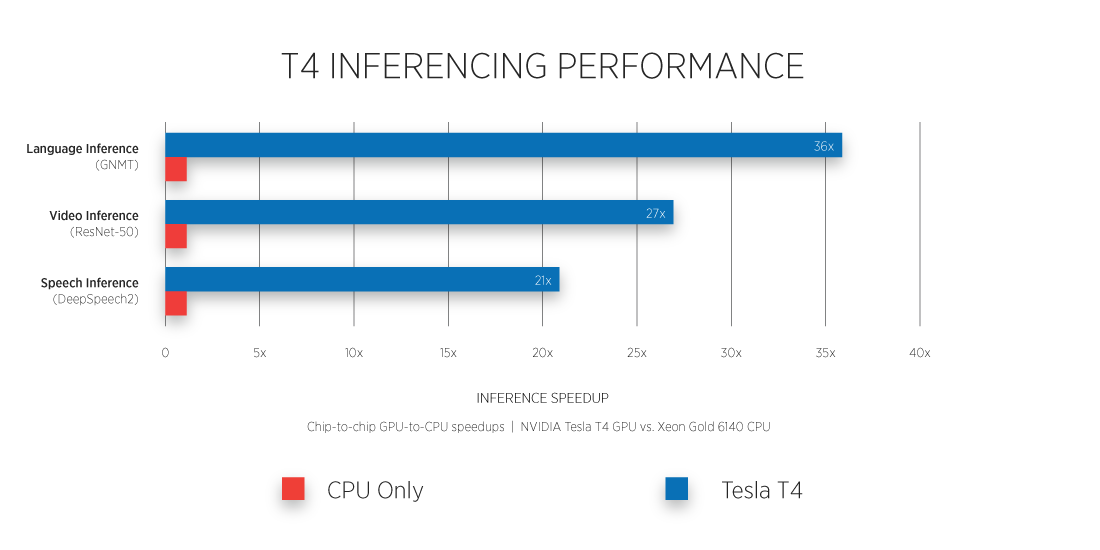

The TensorRT Hyperscale Inference Platform is designed to accelerate inference on voice, image, and video workloads. Every day, massive data centers process billions of images, videos, translations, voice queries, and social media interactions, and each of these applications relies on a different type of neural network running on the server where the processing takes place. To maximize throughput and server utilization in the data center, the TensorRT Hyperscale Inference Platform combines real-time inference software with Tesla T4 GPUs, which NVIDIA says can process queries up to 40x faster than CPUs.

Designed Specifically For AI Inferencing

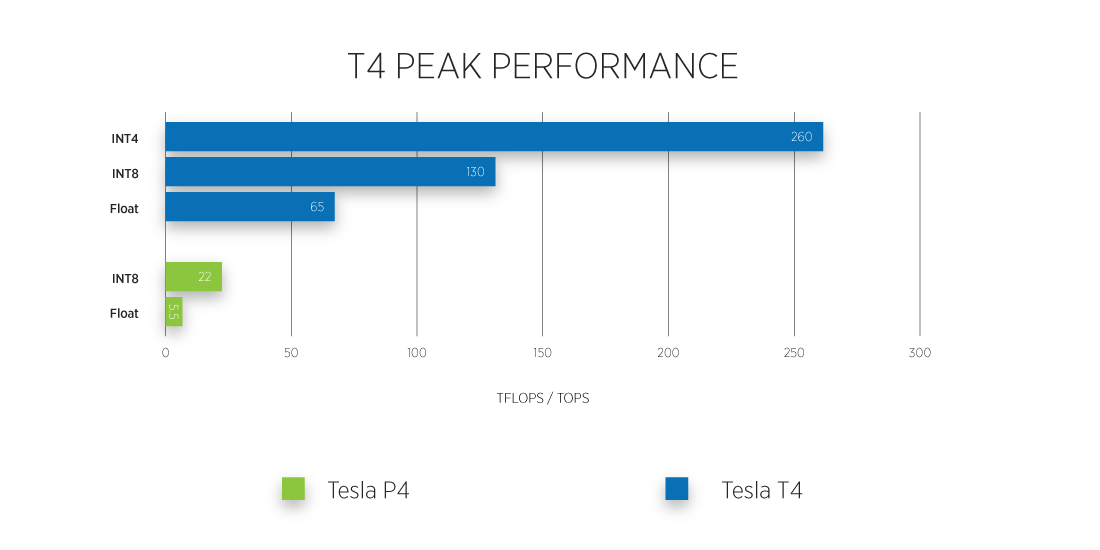

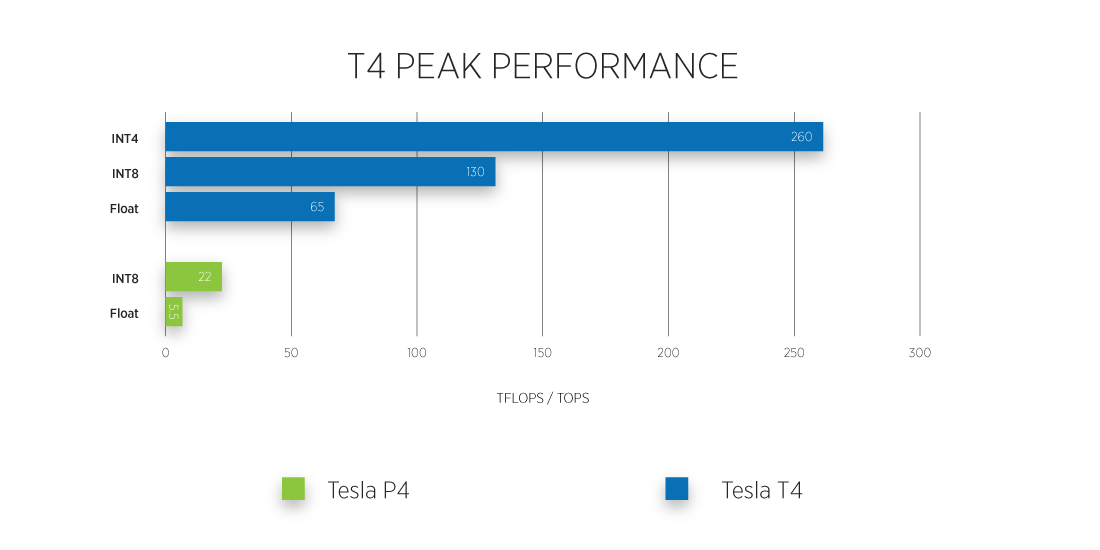

Built to accelerate modern AI applications, the Tesla T4 is powered by 320 Turing Tensor Cores and 2,560 CUDA cores and brings revolutionary multi-precision inference performance across FP32, FP16, INT8, and INT4. It offers peak performance of 65 teraflops for FP16, 130 TOPS for INT8, and 260 TOPS for INT4.
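Because the T4 is pitched on power efficiency, it can help to relate the quoted peak figures to its 75-watt power envelope. The short Python sketch below does only that arithmetic, using the numbers stated in this article. It assumes the full 75 W as the divisor and treats the quoted rates as theoretical peaks, so real-world performance per watt will be lower. The names `T4_PEAK_TERAOPS` and `teraops_per_watt` are illustrative, not part of any NVIDIA tool.

```python
# Illustrative arithmetic only: peak figures as quoted for the Tesla T4.
# Assumption: the full 75 W board power is used as the divisor, and the
# quoted rates are theoretical peaks, not measured inference throughput.
T4_BOARD_POWER_W = 75

# Precision -> peak rate in tera-operations per second
# (teraflops for FP16, TOPS for the integer formats).
T4_PEAK_TERAOPS = {
    "FP16": 65,
    "INT8": 130,
    "INT4": 260,
}


def teraops_per_watt(peak_teraops: float, power_w: float) -> float:
    """Return peak tera-operations per second delivered per watt."""
    return peak_teraops / power_w


def main() -> None:
    for precision, peak in T4_PEAK_TERAOPS.items():
        efficiency = teraops_per_watt(peak, T4_BOARD_POWER_W)
        print(f"{precision}: {peak} peak -> {efficiency:.2f} per watt")

    # Halving the operand width (16 -> 8 -> 4 bits) doubles the quoted peak rate.
    print(T4_PEAK_TERAOPS["INT8"] / T4_PEAK_TERAOPS["FP16"])
    print(T4_PEAK_TERAOPS["INT4"] / T4_PEAK_TERAOPS["INT8"])


if __name__ == "__main__":
    main()
```

Running it prints roughly 0.87 per watt for FP16, 1.73 for INT8, and 3.47 for INT4. This shows why lower-precision inference is attractive on a fixed power budget, provided the model's accuracy holds up at that precision.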

What are your thoughts on the Tesla T4 GPU? Let us know in the comments below or on social media! Have any questions? Contact us directly here.

NVIDIA Announces New Tesla T4 on Turing GPU Architecture